Our Insights

Through many client engagements over several years, and within a wide peer group of consulting professionals, Kenway has developed several key insights and core principles that we believe are integral to the success of any endeavor in the data space.

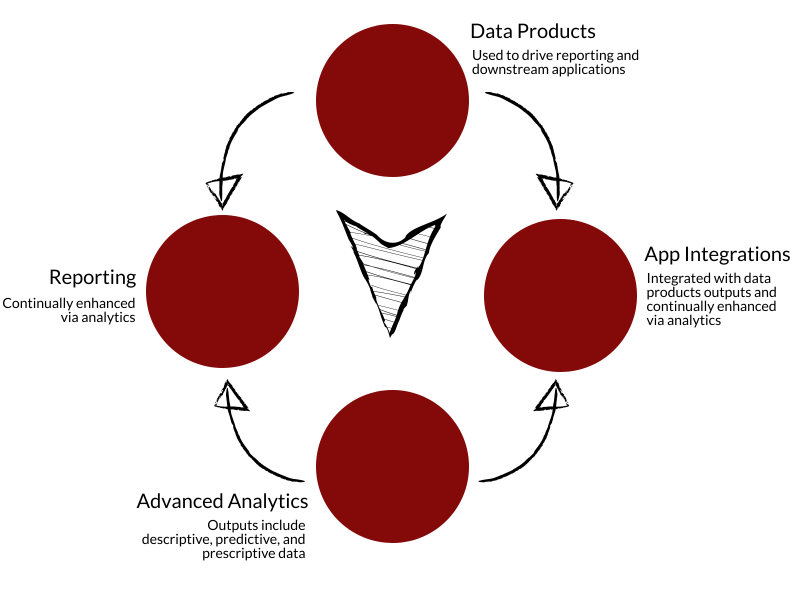

Create Product Mindset

Data integration technologies facilitate both the supply of data (in various forms) to data products and the consumption of data from them.

Simplify Infrastructure

Choose PaaS offerings for the entire stack where possible. Solutions that run pipeline tools on-premises while storing data in the cloud are problematic because data must travel between the two environments, which increases latency. Additionally, on-premises solutions are often complex to maintain from a skills and cost standpoint.

Simplify Architecture

Build simple data pipelines to support data domains and use cases. Avoid duplicating data that serves similar purposes across the solution. Build mechanisms upfront to ensure that report and pipeline development uses production data.

Unwind Dependencies

Ensure that discrete operations (e.g., archival, masking, and standardizing) are adequately decoupled. Keep data models and domains decoupled, for example by using a single warehouse with multiple schemas or data marts, and combine them only when business requirements necessitate it.
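To make the idea of decoupled operations concrete, here is a minimal Python sketch. It assumes a tiny in-memory dataset, and every name in it (`mask_email`, `standardize_country`, `make_archiver`, `run_pipeline`) is hypothetical, chosen only for illustration. Each operation is a separate function that knows nothing about the others, so any one of them can be changed, reordered, or removed on its own.

```python
from typing import Callable, Dict, List

# Hypothetical record and step types, used only for this illustration.
Record = Dict[str, str]
Step = Callable[[List[Record]], List[Record]]


def mask_email(records: List[Record]) -> List[Record]:
    """Masking only: hide the local part of each email address."""
    masked = []
    for record in records:
        _, _, domain = record["email"].partition("@")
        masked.append({**record, "email": "***@" + domain})
    return masked


def standardize_country(records: List[Record]) -> List[Record]:
    """Standardizing only: trim whitespace and upper-case country codes."""
    return [{**r, "country": r["country"].strip().upper()} for r in records]


def make_archiver(archive: List[Record]) -> Step:
    """Archival only: copy incoming records into `archive`, pass them on unchanged."""
    def archive_step(records: List[Record]) -> List[Record]:
        archive.extend(dict(r) for r in records)
        return records
    return archive_step


def run_pipeline(records: List[Record], steps: List[Step]) -> List[Record]:
    """Apply each independent step in order; steps share no state with each other."""
    for step in steps:
        records = step(records)
    return records


raw = [{"email": "ana@example.com", "country": " us "}]
archive: List[Record] = []
result = run_pipeline(raw, [make_archiver(archive), mask_email, standardize_country])
```

Because the steps only share a common input and output shape, dropping the masking step or swapping the archival target means editing the list passed to `run_pipeline`, not the other operations.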