Unlocking Efficiency: Generative AI’s Role in Contact Center Innovation

Introduction

Throughout the past year, Kenway Consultants have been deeply involved in orchestrating the buildout of a Contact Center as a Service (CCaaS) implementation at a major telecom provider in the United States. Our team has played critical roles on the program such as defining the customer experience for each unique self-service offering and expanding on the functionality of their existing IVA. Kenway continues to successfully bridge the gap to the business while working closely with AI Architects, Developers, and technical teams. In this blog, we will share our insights, key challenges faced during the implementation of Generative AI, and the steps taken to overcome these obstacles.

In 2023, more than 25% of all investment dollars in American startups were channeled into AI-focused companies. Global spending on AI for 2024 is projected to exceed $110 billion. Much of this investment is geared towards Generative AI, which saw unprecedented innovation in the last year. Real-world implementations have spanned industries, including Technology, Media, Telecom, and Financial Services, where use cases align most clearly.

The Time-Intensive Problem

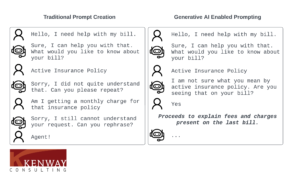

Before diving into the insights gained by implementing Generative AI in the Contact Center space, we will expand on some of the problems with traditional prompt creation and routing to further emphasize the importance of Generative AI. The first problem is the time-intensive nature of traditional flow and prompt creation. Before Developers can build a self-service experience, a myriad of requirements need to be discussed and documented. Business Analysts define the requirements, conversational architects take those requirements and build a visual flow representing the experience, content writers adjust and approve all language that a customer will hear, technical teams conduct data mapping to configure the technical solution, and so on.

Despite this level of attention to detail, certain use cases and edge cases will fall through the gaps and will only be discovered once the experience is live in production. This feeds into the second problem: all callers hear the same generically constructed content, which makes for a less personal, more robotic experience. Furthermore, customer utterances deemed a “No-Match” or a “No-Input” have no path forward other than repeated retries and, ultimately, a transfer to a live agent. Traditional implementation fails to capture these use cases because it is extremely time-consuming and expensive to build handling and routing for every utterance a real customer may provide.

Generative AI in Contact Centers

The introduction of Generative AI helps ease this pain on all fronts. Leveraging Gen AI solutions can reduce time spent throughout the Software Development Lifecycle (SDLC) by reducing the number of prompts and the amount of routing needed to deliver a particular enhancement, and by reducing the time spent solutioning edge cases. Technical teams can spend their time more efficiently, and customers receive a superior experience. Generative AI enables companies to provide personalized prompting for each individual caller’s needs. With the ability to leverage content from the company’s website, existing forums, corporate databases, and more, Generative AI can offer dynamic informational prompting once trained on these materials.

Secondly, Generative AI can mimic the conversational style of the caller: as the call goes on, it starts to use words similar to the caller’s own and can repeat them back if the system is having trouble matching the customer’s utterance to an established route or intent. This powerful feature emulates a human-to-human connection, allowing the IVA to respond as a human would.

Finally, the IVA can keep callers engaged longer with the use of Generative AI, which ultimately improves containment rates, a key metric used to gauge the performance of self-service experiences. In short, an IVA containment rate is the percentage of inbound calls or chats that are successfully handled without the customer having to speak to a human agent. Higher containment rates equate to a lower volume of live agent transfers, improved agent workloads, and, in parallel, a potential reduction in labor costs for the organization.
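To make the containment rate definition concrete, here is a minimal Python sketch of the calculation. The function name and the call volumes are hypothetical and chosen only for illustration; real reporting would pull these counts from the contact center platform.

```python
def containment_rate(total_interactions: int, agent_transfers: int) -> float:
    """Return the percentage of interactions handled without a live agent."""
    if total_interactions <= 0:
        raise ValueError("total_interactions must be positive")
    if not 0 <= agent_transfers <= total_interactions:
        raise ValueError("agent_transfers must be between 0 and total_interactions")
    contained = total_interactions - agent_transfers
    return 100.0 * contained / total_interactions


# Hypothetical volumes, for illustration only.
rate = containment_rate(total_interactions=10_000, agent_transfers=3_500)
print(f"Containment rate: {rate:.1f}%")  # prints "Containment rate: 65.0%"
```

In this example, 6,500 of 10,000 interactions never reached an agent, so every additional point of containment corresponds to 100 fewer live agent transfers.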

Limitations and Challenges of Generative AI Implementation

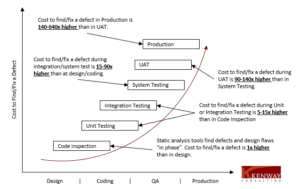

While acknowledging the benefits, the use of Generative AI poses potential drawbacks and challenges. As programs navigate from the traditional SDLC to leveraging Generative AI, the first concern is that testing (Quality Assurance, IST, and User Acceptance Testing) can be significantly more cumbersome. Because Generative AI provides dynamic prompting to different callers, more test cases are required to verify that the application is functioning correctly. To alleviate this stress, organizations can use automated testing platforms such as Botium, by Cyara, to test AI with AI. A powerful tool such as Botium can go through thousands of test cases in a matter of minutes. What better way to test AI than with AI?

In the AI world, made-up values or incorrect facts are called hallucinations. These hallucinations can be caused by a variety of factors, including insufficient training data, incorrect assumptions made by the model, or biases in the data used to train the model. As a result, there are concerns from both a legal and a content standpoint. Generative AI may use verbiage or language that does not have signoff from all involved parties. In the traditional model, content writers meticulously groom each user-facing prompt with legal considerations top of mind. Due to the dynamic nature of Generative AI, customers may receive content that misrepresents the business and its normal communication standards. Even more concerning, there is the risk that something communicated to the user is inaccurate or misleading, potentially opening the organization up to legal penalties. For instance, a recent article by Forbes discusses the legal ramifications of these hallucinations within the airline industry. Additionally, the Guardian outlines a recent case in a court of law that resulted in severe repercussions for a Canadian lawyer.

Mitigation through Design

However, there are tools to mitigate these risks. The first is the appropriate selection of the Large Language Model (LLM), or AI toolset, and of the data used to train the model. Establishing robust guidelines during the model training timeframe can help limit this risk downstream. The data upon which the model is trained will need to be selected carefully to avoid bias. It is also important to continuously tune and deploy the model with parameters that strike a balance between generating a human-like customer experience and staying within the confines of its knowledge base (i.e., avoiding hallucinations). Perpetual monitoring of the model’s performance after it has been deployed to production will further refine the output.

Secondly, leveraging automated testing tools to cover thousands of use cases and edge cases allows teams to identify defects earlier in the DevOps lifecycle, before those defects reach production, reducing costs down the road.
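One way to picture "staying within the knowledge base" is a check that runs after a response is generated and falls back to approved wording when the response is not supported. The Python sketch below is an assumption for illustration, not the method used on the program described here: it uses a naive token-overlap score, and the function names, the threshold value, the fallback wording, and the sample knowledge base are all hypothetical. A production system would likely use a stronger grounding technique.

```python
import re
from typing import List, Set

# Hypothetical pre-approved fallback wording.
FALLBACK_PROMPT = "I'm sorry, I don't have that information. Let me connect you with an agent."


def _tokens(text: str) -> Set[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_score(response: str, knowledge_base: List[str]) -> float:
    """Fraction of response tokens found in the best-matching passage."""
    response_tokens = _tokens(response)
    if not response_tokens:
        return 0.0
    return max(
        (len(response_tokens & _tokens(passage)) / len(response_tokens)
         for passage in knowledge_base),
        default=0.0,
    )


def guarded_response(response: str, knowledge_base: List[str],
                     threshold: float = 0.6) -> str:
    """Return the response if sufficiently grounded, else the fallback."""
    if grounding_score(response, knowledge_base) >= threshold:
        return response
    return FALLBACK_PROMPT


# Hypothetical knowledge base passages, for illustration only.
knowledge_base = [
    "Your bill is due on the 15th of each month.",
    "You can pay your bill online or by phone.",
]

print(guarded_response("Your bill is due on the 15th.", knowledge_base))
print(guarded_response("We will waive all late fees forever.", knowledge_base))
```

The first response is fully supported by a passage and is returned unchanged; the second shares no tokens with any passage, so the caller hears the fallback instead. Raising the threshold trades conversational freedom for tighter adherence to approved content.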
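The idea of running many test utterances against the IVA can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Botium's API: `PromptCheck`, `run_suite`, and `stub_iva` are hypothetical names, and the stub stands in for a call to the real IVA endpoint. Because generated wording varies, the checks assert on required and forbidden phrases rather than on exact text.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PromptCheck:
    utterance: str               # what the simulated caller says
    must_include: List[str]      # phrases the reply is required to contain
    must_not_include: List[str]  # phrases that lack signoff


def run_suite(respond: Callable[[str], str], checks: List[PromptCheck]) -> List[str]:
    """Run every check and return human-readable failures (empty if all pass)."""
    failures = []
    for check in checks:
        reply = respond(check.utterance).lower()
        for phrase in check.must_include:
            if phrase.lower() not in reply:
                failures.append(f"{check.utterance!r}: missing {phrase!r}")
        for phrase in check.must_not_include:
            if phrase.lower() in reply:
                failures.append(f"{check.utterance!r}: contains forbidden {phrase!r}")
    return failures


def stub_iva(utterance: str) -> str:
    """Hypothetical stand-in for the deployed IVA."""
    if "bill" in utterance.lower():
        return "Your bill is due on the 15th. We guarantee no late fees."
    return "Let me connect you with an agent."


checks = [
    PromptCheck("When is my bill due?", ["15th"], ["guarantee"]),
    PromptCheck("asdf qwerty", ["agent"], []),
]

failures = run_suite(stub_iva, checks)
for failure in failures:
    print(failure)
```

Here the suite flags the unapproved word "guarantee" in the billing reply, the kind of defect that is far cheaper to catch before production than after.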

Lastly, keeping the concept of Responsible AI at the forefront when designing the model guidelines will ensure these risks are further tamed. It is worth noting that the selection process for Generative AI will vary depending on the business or industry it intends to support. For example, the AI model deployed in a Contact Center at a telecom provider will vary significantly from a model used to serve an internal purpose at a Private Equity firm, or one used to send out targeted marketing campaigns to potential customers. These models can be seen as highly customizable, where the concept of ‘one size fits all’ does not apply.

Above all, the most impactful mitigation tool for AI risks is choosing the right use cases. Specifying all-encompassing use cases, creating KPIs around engagement volume, evaluating the potential severity of defects, and determining where generative AI would deliver the highest ROI can all be very difficult. However, when appropriate use cases are selected early in the design process, most generative AI limitations can be avoided.

Moving Forward with AI in Contact Centers

It is important to reiterate that the model must have access to reliable and germane data on which it can be trained. Think of a chatbot trained on biased data, potentially perpetuating stereotypes or generating offensive hallucinations. Acquiring and curating high-quality, contextually relevant data is paramount. No business function will be successful without the iterative process of testing, investigating, enhancing, and reassessing the performance of Generative or Conversational AI. This iterative approach ensures that these models not only function effectively, but also continually adapt and grow, becoming invaluable assets for businesses aiming to stay ahead of the curve.

AI continues to evolve and advance, and the future of Generative AI is bright and ever-changing. At Kenway, we have built expertise over the last few years in the AI realm, and we have launched our newly formed Artificial Intelligence Practice. Our services include:

- Conversational AI

- Generative AI

- Computer Vision (Automation)

- AI Enablement & Strategy (where Responsible AI methodologies reside)

As Kenway takes on more Contact Center Implementations and seeks to expand into other industries that are now ready to realize the ROI of AI, the firm continues to design intelligently for the future, ensuring the latest methodologies and tools are deployed for our clients. To read more about our Contact Center Practice, please visit: Contact Center Solutions.

FAQs:

How important is data to being able to build an AI tool?

The importance of the quality and integrity of the data underpinning an AI tool cannot be overstated. Across all industries, AI, and especially Generative AI, requires a robust, compliant, application-specific data set without bias to produce high-quality, ROI-driving responses. Selecting the right data set can be the most important implementation decision a firm makes.

What guidelines or frameworks should I consider when looking to implement Generative AI in my organization?

- Consider AI Ethics guidelines that cover principles such as fairness, accountability, transparency, privacy, and security.

- Consider AI Risk Management frameworks, such as the NIST AI Risk Management Framework (AI RMF), that manage risks associated with AI systems throughout their lifecycle, from design to deployment and monitoring.

- Consider AI Governance frameworks that define governance structures, policies, and processes for AI systems, ensuring alignment with organizational values, risk management, and compliance.

- Consider Responsible AI Frameworks that help ensure AI systems respect human rights and promote inclusiveness.

- Consider Data Privacy and Retention Regulations to ensure you remain compliant with these mandates.

Our business is doing great, and we are consistently beating our revenue targets. Why do I need to invest in AI?

Making a data-driven decision on the implementation of artificial intelligence for a specific use case can not only reduce time spent on specific workstreams but also open doors to new ways to interact with customers, enable more efficient ways to manage tasks, and ensure your organization stays ahead of the curve.

How do I scale?

Before delivering an AI solution at scale across an enterprise, ensure the following criteria are met. The model must be maintainable, robust enough to avoid hallucinations, and efficient to execute throughout the continuous deployment cycle. It should also integrate with core systems and provide performance monitoring to avoid any detrimental impact on internal operations.